The IBTA wrapped up its four-part fall webinar series in December. If you didn’t have the opportunity to attend these events live, a recorded version is available on the IBTA’s website. In the webinar series, we suggested that it makes sense to take a fresh look at I/O in light of recent developments in I/O and data center architecture. We took a high-level look at two RDMA technologies: InfiniBand and a relative newcomer called RoCE (RDMA over Converged Ethernet).

RDMA is an interesting network technology that has been dominant in the HPC marketplace for quite a while. It is now finding increasing application in modern commercial data centers, especially in performance-sensitive environments or environments that depend on an agile, cost-constrained approach to computing, such as almost any form of cloud computing. So it’s no surprise that several questions arose during the webinar series about the differences between a “native” InfiniBand RDMA fabric and one based on RoCE.

In a nutshell, the questions boiled down to this: “What can InfiniBand do that RoCE cannot? If I start down the path of deploying RoCE, why not simply stick with it, or should I plan to migrate to IB?”

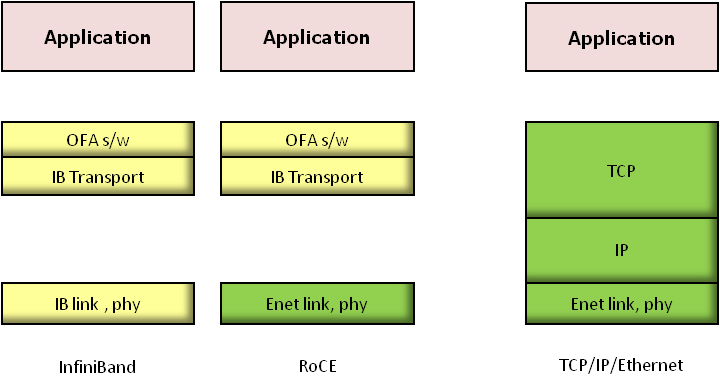

As a quick review, RoCE is a new technology that is best thought of as a network that delivers many of the advantages of RDMA, such as lower latency or improved CPU utilization, but using an Ethernet switched fabric instead of InfiniBand adapters and switches. This is illustrated in the diagram below. Conceptually, RoCE is simple enough, but there is a subtlety that is easy to overlook. Many of us, when we think of Ethernet, naturally envision the complete IP architecture consisting of TCP, IP and Ethernet. But the truth is that RoCE bears no relationship to traditional TCP/IP/Ethernet, even though it uses an Ethernet layer. The diagram also compares the two RDMA technologies to traditional TCP/IP/Ethernet. As the drawing makes clear, RoCE and InfiniBand are sibling technologies, but are only distant cousins to TCP/IP/Ethernet. Indeed, RoCE’s heritage is found in the basic InfiniBand architecture, and RoCE is fully supported by the open source software stacks provided by the OpenFabrics Alliance. So if it’s possible to use Ethernet and still harvest the benefits of RDMA, what’s to choose between the two? Naturally, there are trade-offs to be made.

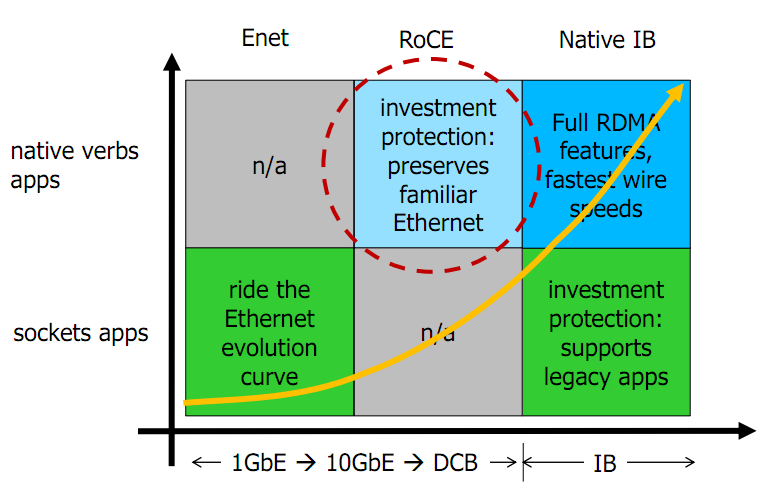
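The sibling and cousin relationships can also be sketched as three layer stacks. The snippet below is a simplified model, not a reproduction of the diagram: the layer labels are illustrative names chosen for this sketch, and it assumes that RoCE carries the InfiniBand transport and network layers over an Ethernet link layer.

```python
# Layer stacks listed top to bottom. The labels are illustrative names
# chosen for this sketch, not terms taken from any specification.
STACKS = {
    "TCP/IP/Ethernet": ["sockets API", "TCP", "IP", "Ethernet link"],
    "RoCE": ["RDMA verbs API", "IB transport", "IB network", "Ethernet link"],
    "InfiniBand": ["RDMA verbs API", "IB transport", "IB network", "IB link"],
}


def shared_layers(a, b):
    """Return the layers that sit at the same depth in both stacks."""
    return [x for x, y in zip(STACKS[a], STACKS[b]) if x == y]


# RoCE and InfiniBand share everything above the link layer.
print(shared_layers("RoCE", "InfiniBand"))
# RoCE and TCP/IP/Ethernet share only the Ethernet link layer.
print(shared_layers("RoCE", "TCP/IP/Ethernet"))
```

Under these assumptions, RoCE and native InfiniBand differ only at the bottom of the stack, while RoCE and traditional TCP/IP/Ethernet have nothing in common above it.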

During the webinar we presented the following chart as a way to illustrate some of the trade-offs that one might encounter in choosing an I/O architecture. The first column shows a pure Ethernet approach, as is common in most data centers today. In this scenario, the data center rides the wave of improvements in Ethernet speeds. Naturally, using traditional TCP/IP/Ethernet, you don’t get any of the RDMA advantages. For this blog, our interest is mainly in the middle and right-hand columns, which focus on the two alternative implementations of RDMA technology.

From the application perspective both RoCE and native InfiniBand present the same API and provide about the same sets of services. So what are the differences between them? They really break down into four distinct areas.

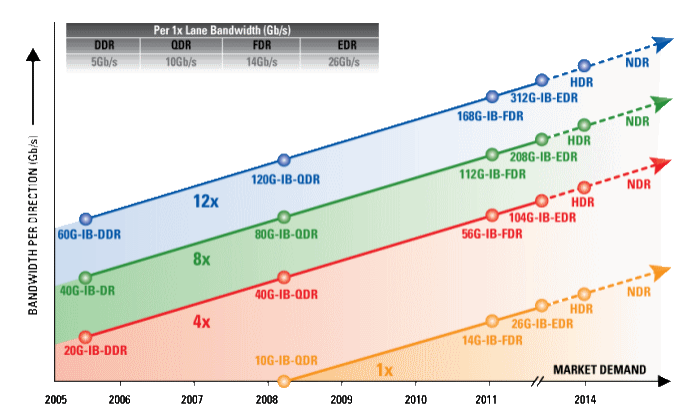

- Wire speed and the bandwidth roadmap. The roadmap for Ethernet is maintained by the IEEE and is designed to suit a broad range of applications, from home networks to corporate LANs to data center interconnects and even wide area networking. Naturally, each type of application has its own requirements, including different speed requirements. For example, client networking does not have the speed requirements that are typical of a data center application. Across this wide range of applications, the Ethernet roadmap naturally tends to reflect the bulk of its intended market, even though speed grades more representative of data center needs (40 and 100GbE) have recently been introduced. The InfiniBand roadmap, on the other hand, is maintained by the InfiniBand Trade Association and has one focus, which is to be the highest performance data center interconnect possible. Commodity InfiniBand components (NICs and switches) at 40Gb/s have been in wide distribution for several years now, and a new 56Gb/s speed grade has recently been announced. Although the InfiniBand and Ethernet roadmaps are slowly converging, it is still true that the InfiniBand bandwidth roadmap leads the Ethernet roadmap. So if bandwidth is a serious concern, you would probably want to think about deploying an InfiniBand fabric.

InfiniBand Speed Roadmap

- Adoption curve. Historically, next generation Ethernet has been deployed first as a backbone (switch-to-switch) technology and has eventually trickled down to the end nodes. 10GbE was ratified in 2002, but until 2007 almost all servers connected to the Ethernet fabric using 1GbE, with 10GbE reserved for the backbone. The same appears to be true for 40 and 100GbE; although the specs were ratified by the IEEE in 2010, an online search for 40GbE NICs reveals only one 40GbE NIC product in the marketplace today. Server adapters for InfiniBand, on the other hand, are ordinarily available coincident with the next announced speed bump, allowing servers to connect to an InfiniBand network at the very latest speed grades right away. 40Gb/s InfiniBand HCAs, known as QDR, have been available for a number of years now, and new adapter products matching the next roadmap speed bump, known as FDR, were announced at SC11 this past fall. The important point here is that one trade-off to be made in deciding between RoCE and native InfiniBand is that RoCE allows you to preserve your familiar Ethernet switched fabric, but at the price of a slower adoption curve compared to native InfiniBand.

- Fabric management. RoCE and InfiniBand both offer many of the features of RDMA, but there is a fundamental difference between an RDMA fabric built on Ethernet using RoCE and one built on top of native InfiniBand wires. The InfiniBand specification describes a complete management architecture based on a central fabric management scheme, which is very much in contrast to traditional Ethernet switched fabrics, which are generally managed autonomously. InfiniBand’s centralized management architecture, which gives its fabric manager a broad view of the entire layer 2 fabric, allows it to provide advanced fabric features such as support for arbitrary layer 2 topologies, partitioning, QoS and so forth. These may or may not be important in any particular environment, but by avoiding the limitations of the traditional spanning tree protocol, InfiniBand fabrics can maximize bisection bandwidth and thereby take full advantage of the fabric capacity. That’s not to say that there are no proprietary solutions in the Ethernet space, or that there is no work underway to improve Ethernet management schemes. But again, if these features are important in your environment, that may tip your choice toward native InfiniBand rather than an Ethernet-based RoCE solution. So when choosing between an InfiniBand fabric and a RoCE fabric, it makes sense to consider the management implications.

- Link level flow control vs. DCB. RDMA, whether native InfiniBand or RoCE, works best when the underlying wires implement a so-called lossless fabric. A lossless fabric is one where packets on the wire are not routinely dropped. By comparison, traditional Ethernet is considered a lossy fabric since it frequently drops packets, relying on the TCP transport layer to notice these lost packets and to adjust for them. InfiniBand, on the other hand, uses a technique known as link level flow control, which ensures that packets are not dropped in the fabric except in the case of serious errors. This technique helps explain much of InfiniBand’s traditionally high bandwidth utilization efficiency. In other words, you get all the bandwidth for which you’ve paid. When using RoCE, you can accomplish almost the same thing by deploying the latest version of Ethernet, sometimes known as Data Center Bridging, or DCB. DCB comprises five new specifications from the IEEE which, taken together, provide almost the same lossless characteristic as InfiniBand’s link level flow control. But there’s a catch: getting the full benefit of DCB requires that your switches and NICs implement the important parts of these new IEEE specifications. I would be very interested to hear from anybody who has experience with these new features in terms of how complex they are to implement in products, how well they work in practice, and whether there are any special management challenges.
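To make the lossy versus lossless distinction in the last item concrete, here is a toy simulation of a single link hop. It is a simplified sketch, not a model of the InfiniBand or DCB specifications: the function name, the one-credit-per-buffer-slot scheme, and the fixed drain rate are all assumptions made for illustration. With credits enabled, the sender transmits only when the receiver has advertised a free buffer slot. Without credits, the sender transmits every tick and the receiver drops whatever does not fit.

```python
from collections import deque


def simulate(num_packets, buffer_slots, drain_every, use_credits):
    """Simulate one link hop and return (delivered, dropped, ticks).

    Hypothetical toy model: the sender offers one packet per tick and the
    receiver drains one packet from its buffer every `drain_every` ticks.
    """
    buffer = deque()
    credits = buffer_slots  # one credit per free receive-buffer slot
    next_packet = 0
    delivered = dropped = tick = 0

    while delivered + dropped < num_packets:
        tick += 1

        # Sender side.
        if next_packet < num_packets:
            if use_credits:
                # Lossless: send only when holding a credit, otherwise stall.
                if credits > 0:
                    credits -= 1
                    buffer.append(next_packet)
                    next_packet += 1
            else:
                # Lossy: send regardless; a full buffer drops the packet.
                if len(buffer) < buffer_slots:
                    buffer.append(next_packet)
                else:
                    dropped += 1
                next_packet += 1

        # Receiver side: drain one packet on every drain_every-th tick.
        if tick % drain_every == 0 and buffer:
            buffer.popleft()
            delivered += 1
            if use_credits:
                credits += 1  # return the freed slot to the sender

    return delivered, dropped, tick


lossless = simulate(100, buffer_slots=4, drain_every=2, use_credits=True)
lossy = simulate(100, buffer_slots=4, drain_every=2, use_credits=False)
print("with credits:   ", lossless)
print("without credits:", lossy)
```

In the credit-based run no packet is ever dropped, because the sender never transmits into a full buffer; it simply waits. In the lossy run, some packets are dropped and would have to be recovered by a higher layer, which is the job TCP performs in a traditional Ethernet network.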

As we pointed out in the webinars, there are many practical routes to follow on the path to an RDMA fabric. In some environments, it is entirely likely that RoCE will be the ultimate destination, providing many of the benefits of RDMA technology while preserving major investments in existing Ethernet. In some other cases, RoCE presents a great opportunity to become familiar with RDMA on the way toward implementing the highest performance solution based on InfiniBand. Either way, it makes sense to understand some of these key differences in order to make the best decision going forward.

If you didn’t get a chance to attend any of the webinars or missed one of the parts, be sure to check out the recording here on the IBTA website. Or, if you have any lingering questions about the webinars or InfiniBand and RoCE, email me at pgrun@systemfabricworks.com.

Paul Grun

System Fabric Works