(Note: This article appears with reprint permission of The Exascale Report™)

InfiniBand has been making remarkable progress in HPC, as evidenced by its growth in the Top500 rankings of the highest-performing computers. In the November 2010 update to these rankings, InfiniBand’s use increased another 18 percent, to help power 43 percent of all listed systems, including 57 percent of all high-end “Petascale” systems.

The march toward ever-higher performance continues. Computation is now a critical part of science, complementing observation, experiment and theory. The computational performance of high-end computers has been increasing by a factor of 1000 every 11 years.
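To put the stated rate in more familiar terms, the short sketch below converts "a factor of 1000 every 11 years" into an annual growth factor and into the time needed for a given increase. It assumes the growth is smooth and exponential, which is a simplification of the real, step-wise progression of the Top500 list; the function names are illustrative only.

```python
import math

# Rate stated in the text: a factor of 1000 in peak performance every 11 years.
GROWTH_FACTOR = 1000.0
GROWTH_PERIOD_YEARS = 11.0


def annual_growth_factor() -> float:
    """Per-year multiplier implied by smooth exponential growth at the stated rate."""
    return GROWTH_FACTOR ** (1.0 / GROWTH_PERIOD_YEARS)


def years_for_factor(factor: float) -> float:
    """Years needed to grow by `factor` at the stated rate."""
    return GROWTH_PERIOD_YEARS * math.log(factor) / math.log(GROWTH_FACTOR)


print(f"per year: x{annual_growth_factor():.3f}")                   # about x1.874
print(f"doubling time: {years_for_factor(2):.2f} years")            # about 1.10
print(f"one order of magnitude: {years_for_factor(10):.2f} years")  # about 3.67
```

At this rate each order of magnitude takes roughly three and two-thirds years, which is the pacing behind the step-by-step progression from Petascale to Exascale discussed later.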

InfiniBand has demonstrated that it plays an important role at today’s Petascale level of computing, driven by its bandwidth, low-latency implementations and fabric efficiency. This article explores how InfiniBand will continue to keep pace with high-end computing as it moves toward the Exascale level.

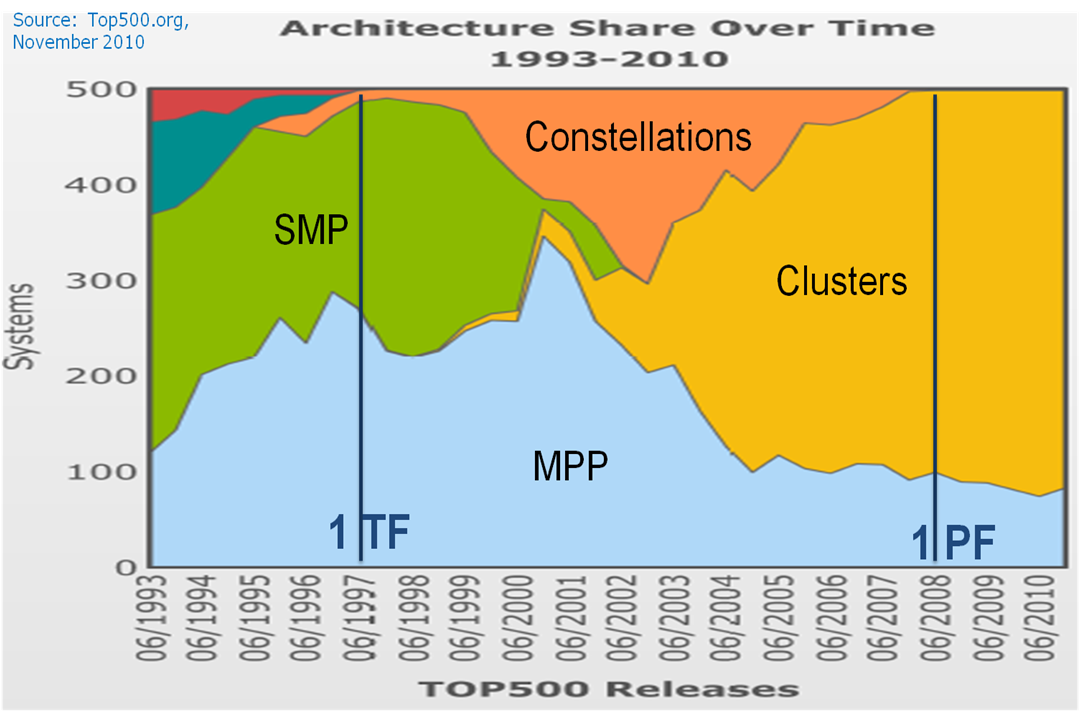

Figure 1 – The Golden Age of Cluster Computing

InfiniBand Today

Figure 1 illustrates how the high end of HPC crossed the 1 TeraFLOPS mark in 1997 (10^12 floating-point operations per second) and increased three orders of magnitude to reach the 1 PetaFLOPS mark in 2008 (10^15 floating-point operations per second). As the figure shows, the underlying system architectures changed dramatically during this period. The cluster computing model, based on commodity server processors, has come to dominate much of high-end HPC. More recently, this model has been augmented by the emergence of GPUs.

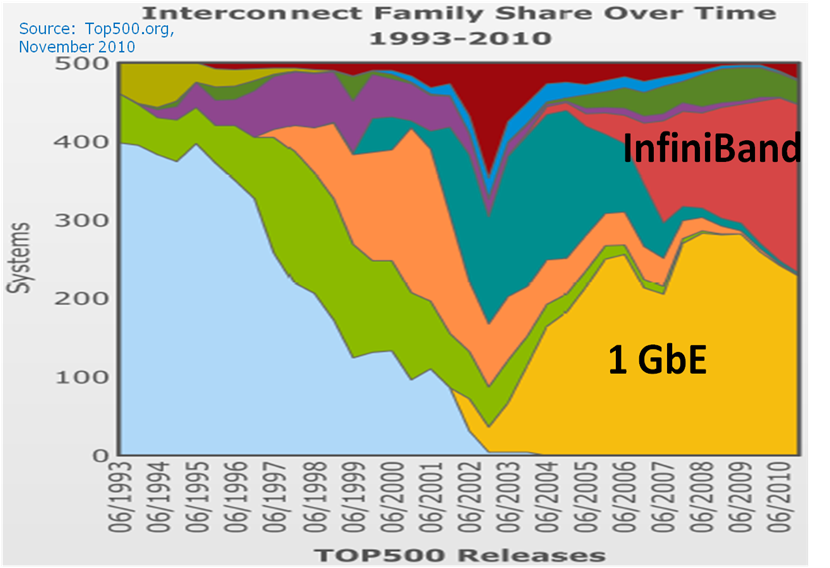

Figure 2 – Emergence of InfiniBand in the Top500

Figure 2 shows how interconnects track changes in the underlying system architectures. The appearance first of 1 GbE, and then the growth of InfiniBand, were key enablers of the cluster computing model. The industry-standard InfiniBand and Ethernet interconnects have largely displaced earlier proprietary interconnects. InfiniBand continues to gain share relative to Ethernet, driven largely by performance factors such as low latency and high bandwidth, by the ability to support fabrics with high bisection bandwidth, and by overall cost-effectiveness.

Getting to Exascale

What we know today is that Exascale computing will require systems enormously larger than those available today. What we don’t know is how those computers will look. We have been in the golden age of cluster computing for much of the past decade, and the model appears to scale well going forward. However, there is as yet no clear consensus on the system architecture for Exascale. What we can do is map the evolution of InfiniBand to the evolution of Exascale.

Given historical growth rates, the industry anticipates reaching Exascale computing around 2018. However, going three orders of magnitude beyond where we are today is too great a change to make in a single leap. In addition, the industry is still assessing what system structures will make up systems of that size.

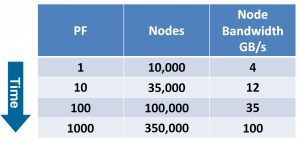

Figure 3 – Steps from Petascale to Exascale

Figure 3 outlines the key capabilities the interconnect will need as computer systems grow in performance, one order of magnitude at a time, from current high-end systems at 1 PetaFLOPS to 10 PF, 100 PF and finally 1000 PF = 1 ExaFLOPS. Over time, computational nodes will deliver increasing performance through advances in processor and system architecture. This performance increase must be matched by a corresponding increase in network bandwidth to each node. At the same time, higher performance per node tends to hold down the growth in the total number of nodes required to reach a given level of system performance.
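The trade-off described above can be made concrete with a back-of-the-envelope sizing sketch. It calibrates a bytes-per-FLOP ratio from the article’s 10 PetaFLOPS step (about 35,000 nodes with 12 GB/s links), then assumes that ratio stays fixed as node performance grows. The fixed ratio, the helper `size_system`, and the 1 and 2 TFLOPS node figures are all hypothetical, chosen only to illustrate the scaling.

```python
import math

# Reference point from the article's 10 PetaFLOPS step: about 35,000 nodes,
# each needing roughly 12 GB/s of link bandwidth.
REF_SYSTEM_FLOPS = 10e15
REF_NODES = 35_000
REF_LINK_BYTES_PER_S = 12e9

# Derived, not stated in the article: per-node performance (~286 GFLOPS)
# and the bytes-per-FLOP ratio (~0.042) implied by that reference point.
REF_NODE_FLOPS = REF_SYSTEM_FLOPS / REF_NODES
BYTES_PER_FLOP = REF_LINK_BYTES_PER_S / REF_NODE_FLOPS


def size_system(system_flops: float, node_flops: float,
                bytes_per_flop: float = BYTES_PER_FLOP) -> tuple[int, float]:
    """Return (node count, per-node link bandwidth in bytes/s).

    Hypothetical helper: assumes link bandwidth scales linearly with node
    performance at a fixed bytes-per-FLOP ratio, which is a simplification.
    """
    nodes = math.ceil(system_flops / node_flops)
    link_bytes_per_s = node_flops * bytes_per_flop
    return nodes, link_bytes_per_s


# Same 100 PetaFLOPS target built from 1 TFLOPS versus 2 TFLOPS nodes.
for node_flops in (1e12, 2e12):
    nodes, link = size_system(100e15, node_flops)
    print(f"{node_flops / 1e12:.0f} TFLOPS nodes: {nodes} nodes, "
          f"{link / 1e9:.0f} GB/s per link")
```

Doubling per-node performance halves the node count but doubles the bandwidth each link must carry, which is why the interconnect roadmap has to advance in both scale and link speed.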

Today, 4x QDR InfiniBand (40 Gbps) is the interconnect of choice for many large-scale clusters. Current InfiniBand technology supports systems on the order of 1 PetaFLOPS well. Deployments on the order of 10,000 nodes have been achieved, and 4x QDR link bandwidths are offered by multiple vendors. InfiniBand interconnects are used in 57 percent of the current Petascale systems on the Top500 list.

Moving from 1 PetaFLOPS to 10 PetaFLOPS is well within reach of the current InfiniBand roadmap. Systems of about 35,000 nodes fit within the currently defined InfiniBand address space. The required 12 GB/s links can be achieved either with 12x QDR or, more likely, with 4x EDR data rates (104 Gbps), now being defined as part of the InfiniBand industry bandwidth roadmap. Such data rates also anticipate PCIe Gen3 host connections, which are expected with the forthcoming processor generation.
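The link-rate arithmetic above can be checked with a small sketch. It assumes QDR signals at 10 Gbps per lane with 8b/10b encoding, and takes EDR at 26 Gbps per lane (the article’s 104 Gbps for a 4x link) with 64b/66b encoding; these encoding details are assumptions from the published InfiniBand link designs, and final EDR products may use slightly different lane rates. The helper name is illustrative.

```python
# Per-lane signalling rate (Gbit/s) and line-code efficiency for each data rate.
# QDR: 10 Gbit/s per lane, 8b/10b encoding.
# EDR: 26 Gbit/s per lane, matching the article's 104 Gbit/s for a 4x link;
#      64b/66b encoding is assumed here (roadmap figures may differ from products).
RATES = {
    "QDR": (10.0, 8 / 10),
    "EDR": (26.0, 64 / 66),
}


def link_data_gbytes_per_s(width: int, rate: str) -> float:
    """Usable data bandwidth in GB/s for a link of `width` lanes (e.g. 4 or 12)."""
    lane_gbps, efficiency = RATES[rate]
    return width * lane_gbps * efficiency / 8  # 8 bits per byte


for width, rate in [(4, "QDR"), (12, "QDR"), (4, "EDR")]:
    print(f"{width}x {rate}: {link_data_gbytes_per_s(width, rate):.1f} GB/s")
# 4x QDR: 4.0 GB/s
# 12x QDR: 12.0 GB/s
# 4x EDR: 12.6 GB/s
```

Under these assumptions both 12x QDR and 4x EDR meet the 12 GB/s requirement; 4x EDR does so with a third of the lanes, which is one reason it is the more likely choice.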

The next order-of-magnitude increase in system performance, from 10 PetaFLOPS to 100 PetaFLOPS, will require further evolution of the InfiniBand standards so that hundreds of thousands of nodes can be addressed. The InfiniBand industry is already initiating discussions about what evolved capabilities systems of such scale will need. As in the previous step, the required link bandwidths can be achieved with 12x EDR (currently being defined) or perhaps 4x HDR (identified on the InfiniBand industry roadmap). Systems of such scale may also exploit topologies such as mesh/torus or hypercube, for which large-scale InfiniBand deployments already exist.
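Why 35,000 nodes fit but hundreds of thousands do not comes down to the size of the InfiniBand local identifier (LID) space. The sketch below assumes a single subnet addressed by 16-bit LIDs, using the unicast range defined in the InfiniBand Architecture Specification (0x0001 to 0xBFFF). The LID budget is simplified: one LID per switch, 2^LMC LIDs per end port, and the helper names and the 300,000-node example are hypothetical.

```python
# InfiniBand addresses ports within a subnet by 16-bit local identifiers (LIDs).
# 0x0000 is reserved, 0x0001-0xBFFF are unicast, 0xC000-0xFFFE are multicast,
# and 0xFFFF is the permissive LID.
UNICAST_LID_FIRST = 0x0001
UNICAST_LID_LAST = 0xBFFF
UNICAST_LIDS = UNICAST_LID_LAST - UNICAST_LID_FIRST + 1  # 49,151


def lids_needed(end_ports: int, switches: int = 0, lmc: int = 0) -> int:
    """Simplified LID budget for one subnet (hypothetical helper).

    Each end port consumes 2**lmc LIDs (LMC > 0 is used for multipathing);
    each switch is counted as consuming a single LID.
    """
    return end_ports * (2 ** lmc) + switches


def fits_in_subnet(end_ports: int, switches: int = 0, lmc: int = 0) -> bool:
    return lids_needed(end_ports, switches, lmc) <= UNICAST_LIDS


print(fits_in_subnet(35_000))         # True: roughly the 10 PetaFLOPS step
print(fits_in_subnet(35_000, lmc=1))  # False: two LIDs per port exceed the space
print(fits_in_subnet(300_000))        # False: hundreds of thousands of nodes
```

The second case shows that headroom shrinks further when multipath addressing (LMC) is used, which is part of why larger systems call for evolved addressing capabilities.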

The remaining order-of-magnitude increase in system performance, from 100 PetaFLOPS to 1 ExaFLOPS, requires link bandwidths to increase once again: either 12x HDR or 4x NDR links will need to be defined. Optical technology is also expected to play a greater role in systems of such scale.

The Meaning of Exascale

Reaching Exascale computing levels involves much more than just the interconnect. Pending further developments in computer systems design and technology, such systems are expected to occupy many hundreds of racks and consume perhaps 20 MW of power. Just as many of today’s high-end systems are purpose-built with unique packaging, power distribution, cooling and interconnect architectures, we should expect Exascale systems to be predominantly purpose-built. However, before we conclude that the golden age of cluster computing, with its reliance on effective industry-standard interconnects such as InfiniBand, has ended, let’s look further at the data.
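As a rough check on what the 20 MW estimate implies, assume a system sustaining 1 ExaFLOPS within that power budget (an assumption; the article gives only the approximate power figure). The required energy efficiency is then:

```latex
\frac{10^{18}\ \text{FLOP/s}}{20 \times 10^{6}\ \text{W}}
  = 5 \times 10^{10}\ \text{FLOP/s per W}
  = 50\ \text{GFLOPS/W}
```

Every component, the interconnect included, has to fit within that efficiency envelope.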

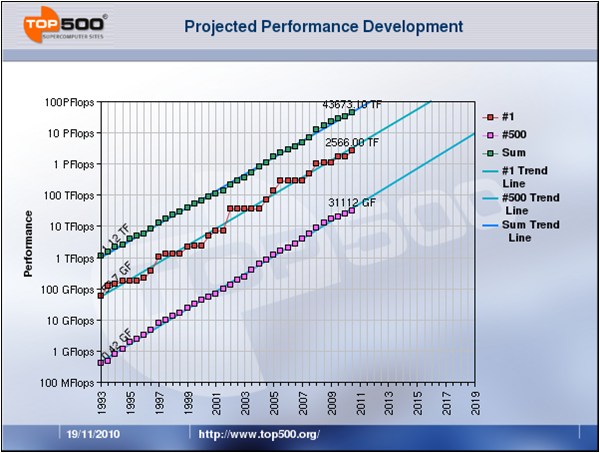

Figure 4 – Top500 Performance Trends

Figure 4 is the performance trends chart from the Top500 list. At first glance, it shows the tremendous growth of high-end HPC over the past two decades and projects these trends to continue for the next decade. However, it also shows that the #1 ranked system outperforms the #500 ranked system by about two orders of magnitude.

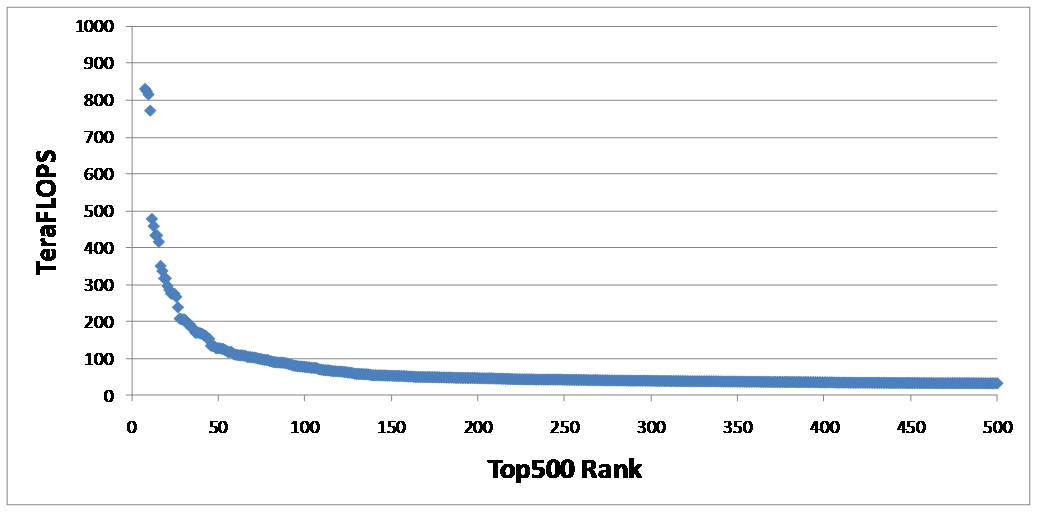

Figure 5 – Top500 below 1 PetaFLOPS (November 2010)

This is further illustrated in Figure 5, which plots performance against rank for the November 2010 Top500 list; the seven systems above 1 PetaFLOPS have been omitted so as not to stretch the vertical axis too much. Only the 72 highest-ranked systems come within an order of magnitude of 1 PetaFLOPS (1000 TeraFLOPS). This trend is expected to continue, which implies that once the highest-end HPC systems reach the 1 ExaFLOPS threshold, most Top500 systems will be on the order of 100 PetaFLOPS at most, with the #500 ranked system on the order of 10 PetaFLOPS.
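The projection above follows from applying the roughly 100x gap between the #1 and #500 systems at each step of Figure 3. The sketch below assumes that ratio stays constant over time, which the Top500 trend lines suggest but do not guarantee; the constant and function name are illustrative.

```python
# Approximate performance ratio between the #1 and #500 systems on the Top500
# list ("about two orders of magnitude"); assumed constant over time.
RANK1_TO_RANK500 = 100.0


def projected_rank500_flops(rank1_flops: float,
                            ratio: float = RANK1_TO_RANK500) -> float:
    """Rough #500 performance implied by a given #1 performance."""
    return rank1_flops / ratio


# Walk the #1 system through the steps of Figure 3: 1 PF, 10 PF, 100 PF, 1 EF.
for rank1 in (1e15, 1e16, 1e17, 1e18):
    rank500 = projected_rank500_flops(rank1)
    print(f"#1 at {rank1 / 1e15:g} PF -> #500 at about {rank500 / 1e15:g} PF")
```

When the #1 system reaches 1 ExaFLOPS, the #500 system lands near 10 PetaFLOPS, the scale that the current InfiniBand roadmap already addresses.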

Although we often use the Top500 rankings as an indicator of high-end HPC, the vast majority of HPC deployments occur below the Top500.

InfiniBand Evolution

InfiniBand has been an extraordinarily effective interconnect for HPC, with demonstrated scaling up to the Petascale level. The InfiniBand architecture permits low-latency implementations and has a bandwidth roadmap that matches the capabilities of host processor technology. Its fabric architecture permits highly efficient fabrics to be implemented and deployed in a range of topologies, with congestion management and resiliency capabilities.

The InfiniBand community has shown that the architecture can evolve to remain vibrant. The Technical Working Group is currently assessing further architectural evolution so that InfiniBand can continue to meet the needs of increasing system scale.

As we move towards an Exascale HPC environment with possibly purpose-built systems, the cluster computing model enabled by InfiniBand interconnects will remain a vital communications model capable of extending well into the Top500.

Lloyd Dickman

Technical Working Group, IBTA
